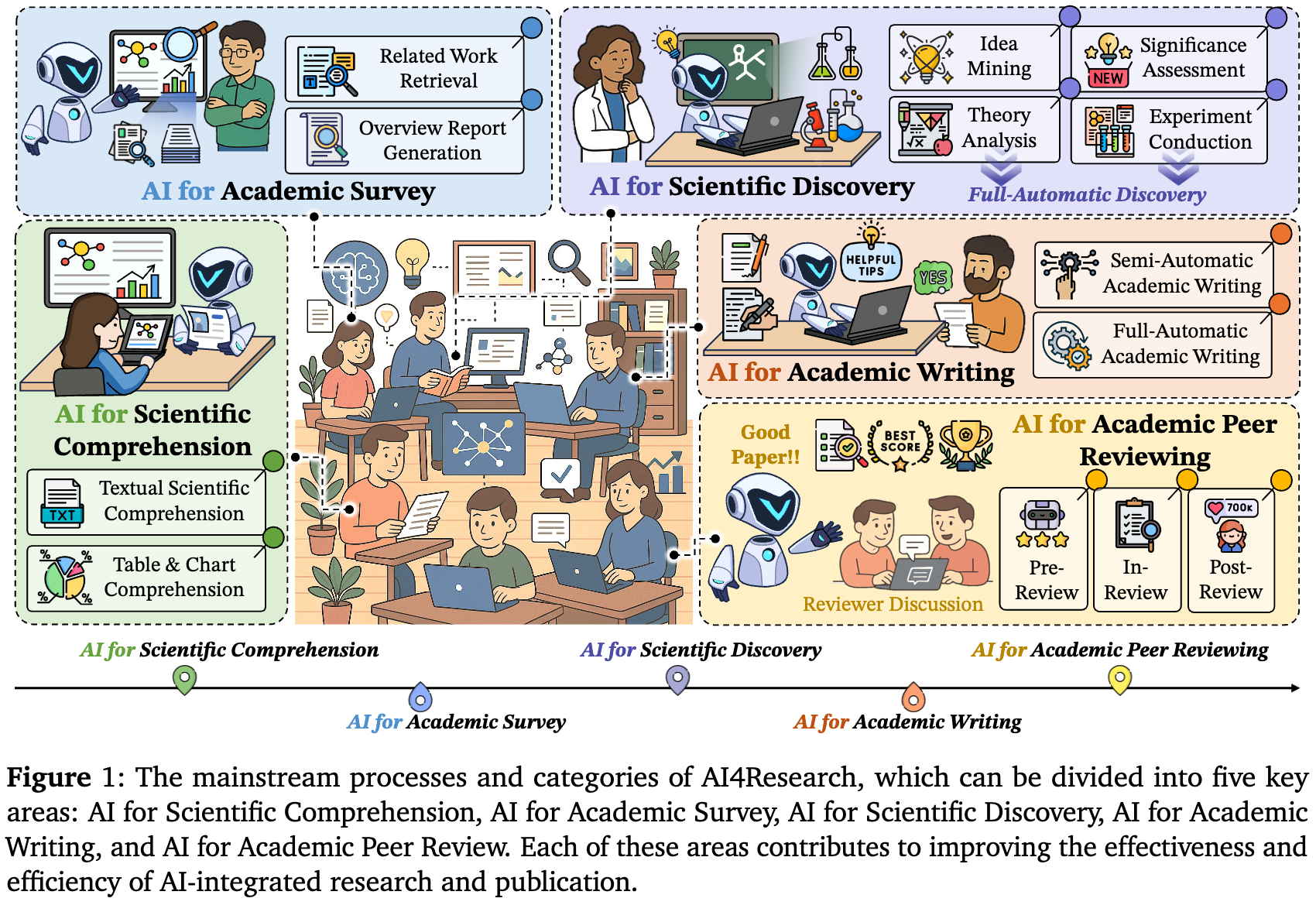

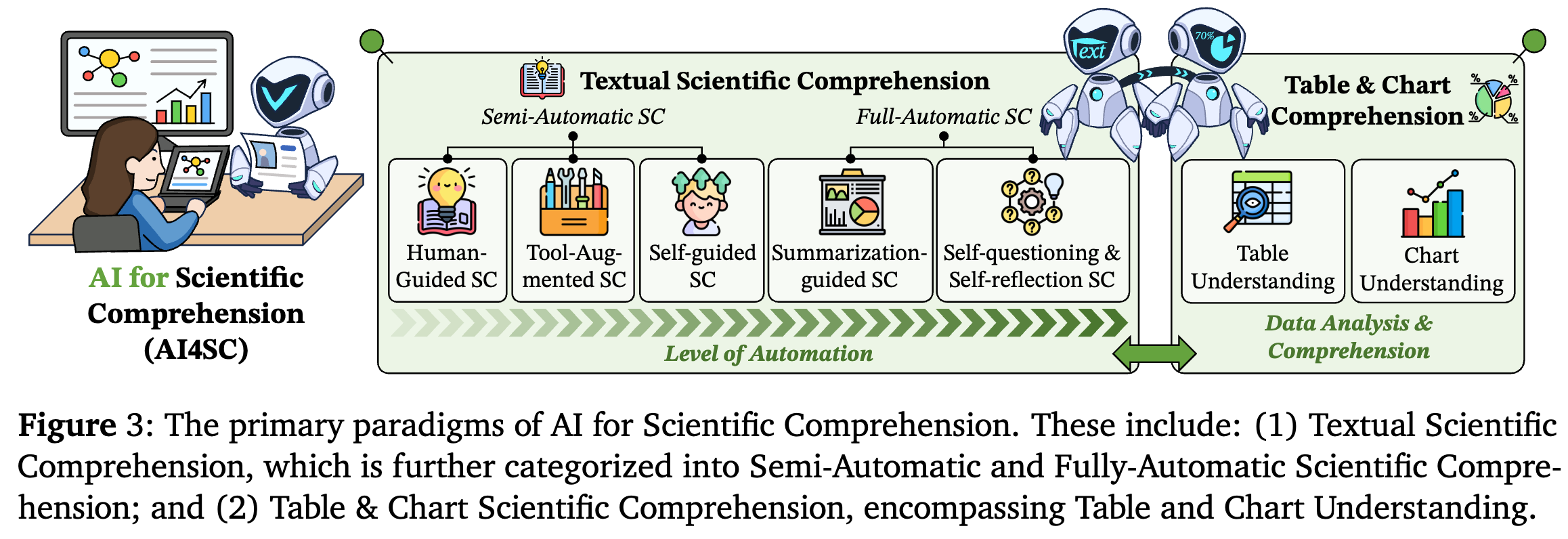

1. AI for Scientific Comprehension

1.1 Textual Scientific Comprehension

- Open-retrieval conversational question answering, Qu et al.,

- A non-factoid question-answering taxonomy, Bolotova et al.,

- How Well Do Large Language Models Extract Keywords? A Systematic Evaluation on Scientific Corpora, Mansour et al.,

1.1.1 Semi-Automatic Scientific Comprehension

- Scholarchemqa: Unveiling the power of language models in chemical research question answering, Chen et al.,

- Evaluating and Training Long-Context Large Language Models for Question Answering on Scientific Papers, Hilgert et al.,

- Are plain language summaries more readable than scientific abstracts? Evidence from six biomedical and life sciences journals, Wen et al.,

- Clam: Selective clarification for ambiguous questions with generative language models, Kuhn et al.,

- Clarify when necessary: Resolving ambiguity through interaction with lms, Zhang et al.,

- Empowering language models with active inquiry for deeper understanding, Pang et al.,

- Iqa-eval: Automatic evaluation of human-model interactive question answering, Li et al.,

- The ai scientist-v2: Workshop-level automated scientific discovery via agentic tree search, Yamada et al.,

- Truly Assessing Fluid Intelligence of Large Language Models through Dynamic Reasoning Evaluation, Yang et al.,

- CiteWorth: Cite-Worthiness Detection for Improved Scientific Document Understanding, Wright et al.,

- Scienceqa: A novel resource for question answering on scholarly articles, Saikh et al.,

- Human and technological infrastructures of fact-checking, Juneja et al.,

- Paperqa: Retrieval-augmented generative agent for scientific research, Lala et al.,

- Efficacy analysis of online artificial intelligence fact-checking tools, Hartley et al.,

- Language agents achieve superhuman synthesis of scientific knowledge, Skarlinski et al.,

- Graphusion: a RAG framework for Knowledge Graph Construction with a global perspective, Yang et al.,

- SciAgent: Tool-augmented Language Models for Scientific Reasoning, Ma et al.,

- Hallucination Mitigation using Agentic AI Natural Language-Based Frameworks, Gosmar et al.,

- MedBioLM: Optimizing Medical and Biological QA with Fine-Tuned Large Language Models and Retrieval-Augmented Generation, Kim et al.,

- Towards reasoning era: A survey of long chain-of-thought for reasoning large language models, Chen et al.,

- Self-Critique Guided Iterative Reasoning for Multi-hop Question Answering, Chu et al.,

- CCHall: A Novel Benchmark for Joint Cross-Lingual and Cross-Modal Hallucinations Detection in Large Language Models, Zhang et al.,

- Boolq: Exploring the surprising difficulty of natural yes/no questions, Clark et al.,

- SciBERT: A Pretrained Language Model for Scientific Text, Beltagy et al.,

- CoQUAD: a COVID-19 question answering dataset system, facilitating research, benchmarking, and practice, Raza et al.,

- Quaser: Question answering with scalable extractive rationalization, Ghoshal et al.,

- Spaceqa: Answering questions about the design of space missions and space craft concepts, Garcia-Silva et al.,

- What if: Generating code to answer simulation questions in chemistry texts, Peretz et al.,

- Biomedlm: A 2.7B parameter language model trained on biomedical text, Bolton et al.,

- Scifibench: Benchmarking large multimodal models for scientific figure interpretation, Roberts et al.,

- Scholarchemqa: Unveiling the power of language models in chemical research question answering, Chen et al.,

- Mmsci: A dataset for graduate-level multi-discipline multimodal scientific understanding, Li et al.,

- Multimodal ArXiv: A Dataset for Improving Scientific Comprehension of Large Vision-Language Models, Li et al.,

- What are the essential factors in crafting effective long context multi-hop instruction datasets? insights and best practices, Chen et al.,

- Fine-Tuning Large Language Models for Scientific Text Classification: A Comparative Study, Rostam et al.,

- L-CiteEval: Do Long-Context Models Truly Leverage Context for Responding?, Tang et al.,

- Toward expert-level medical question answering with large language models, Singhal et al.,

- A comprehensive survey on long context language modeling, Liu et al.,

- A survey on transformer context extension: Approaches and evaluation, Liu et al.,

1.1.2 Full-Automatic Scientific Comprehension

Summarization-guided Automatic Scientific Comprehension

- Straight from the scientist's mouth—plain language summaries promote laypeople's comprehension and knowledge acquisition when reading about individual research findings in psychology, Kerwer et al.,

- Hierarchical attention graph for scientific document summarization in global and local level, Zhao et al.,

- Can Large Language Model Summarizers Adapt to Diverse Scientific Communication Goals?, Fonseca et al.,

- Autonomous LLM-Driven Research—from Data to Human-Verifiable Research Papers, Ifargan et al.,

- Large language models can self-improve, Huang et al.,

- Selfcheck: Using llms to zero-shot check their own step-by-step reasoning, Miao et al.,

- Enabling Language Models to Implicitly Learn Self-Improvement, Wang et al.,

- Sciglm: Training scientific language models with self-reflective instruction annotation and tuning, Zhang et al.,

- Generating Multiple Choice Questions from Scientific Literature via Large Language Models, Luo et al.,

- SciQAG: A Framework for Auto-Generated Science Question Answering Dataset with Fine-grained Evaluation, Wan et al.,

- Recursive introspection: Teaching language model agents how to self-improve, Qu et al.,

- Mind the Gap: Examining the Self-Improvement Capabilities of Large Language Models, Song et al.,

- FRAME: Feedback-Refined Agent Methodology for Enhancing Medical Research Insights, Yu et al.,

- Introspective Growth: Automatically Advancing LLM Expertise in Technology Judgment, Wu et al.,

1.2 Table & Chart Scientific Comprehension

- How well do large language models understand tables in materials science?, Circi et al.,

- ArxivDIGESTables: Synthesizing Scientific Literature into Tables using Language Models, Newman et al.,

- Sciverse: Unveiling the knowledge comprehension and visual reasoning of lmms on multi-modal scientific problems, Guo et al.,

1.2.1 Table Understanding

- A survey on table-and-text hybridqa: Concepts, methods, challenges and future directions, Wang et al.,

- Chain-of-Table: Evolving Tables in the Reasoning Chain for Table Understanding, Wang et al.,

- Improving demonstration diversity by human-free fusing for text-to-SQL, Wang et al.,

- Table Meets LLM: Can Large Language Models Understand Structured Table Data? A Benchmark and Empirical Study, Sui et al.,

- Multimodal Table Understanding, Zheng et al.,

- Tree-of-Table: Unleashing the Power of LLMs for Enhanced Large-Scale Table Understanding, Ji et al.,

- Tablemaster: A recipe to advance table understanding with language models, Cao et al.,

- A survey of table reasoning with large language models, Zhang et al.,

- The Mighty ToRR: A Benchmark for Table Reasoning and Robustness, Ashury-Tahan et al.,

- Tablebench: A comprehensive and complex benchmark for table question answering, Wu et al.,

1.2.2 Chart Understanding

- Chartassistant: A universal chart multimodal language model via chart-to-table pre-training and multitask instruction tuning, Meng et al.,

- SPIQA: A Dataset for Multimodal Question Answering on Scientific Papers, Pramanick et al.,

- ChartInstruct: Instruction Tuning for Chart Comprehension and Reasoning, Masry et al.,

- ChartAssistant: A Universal Chart Multimodal Language Model via Chart-to-Table Pre-training and Multitask Instruction Tuning, Meng et al.,

- SceMQA: A Scientific College Entrance Level Multimodal Question Answering Benchmark, Liang et al.,

- Multimodal ArXiv: A Dataset for Improving Scientific Comprehension of Large Vision-Language Models, Li et al.,

- SynChart: Synthesizing Charts from Language Models, Liu et al.,

- NovaChart: A Large-scale Dataset towards Chart Understanding and Generation of Multimodal Large Language Models, Hu et al.,

- ChartGemma: Visual Instruction-tuning for Chart Reasoning in the Wild, Masry et al.,

- ChartSketcher: Reasoning with Multimodal Feedback and Reflection for Chart Understanding, Huang et al.,

- Multimodal DeepResearcher: Generating Text-Chart Interleaved Reports From Scratch with Agentic Framework, Yang et al.,

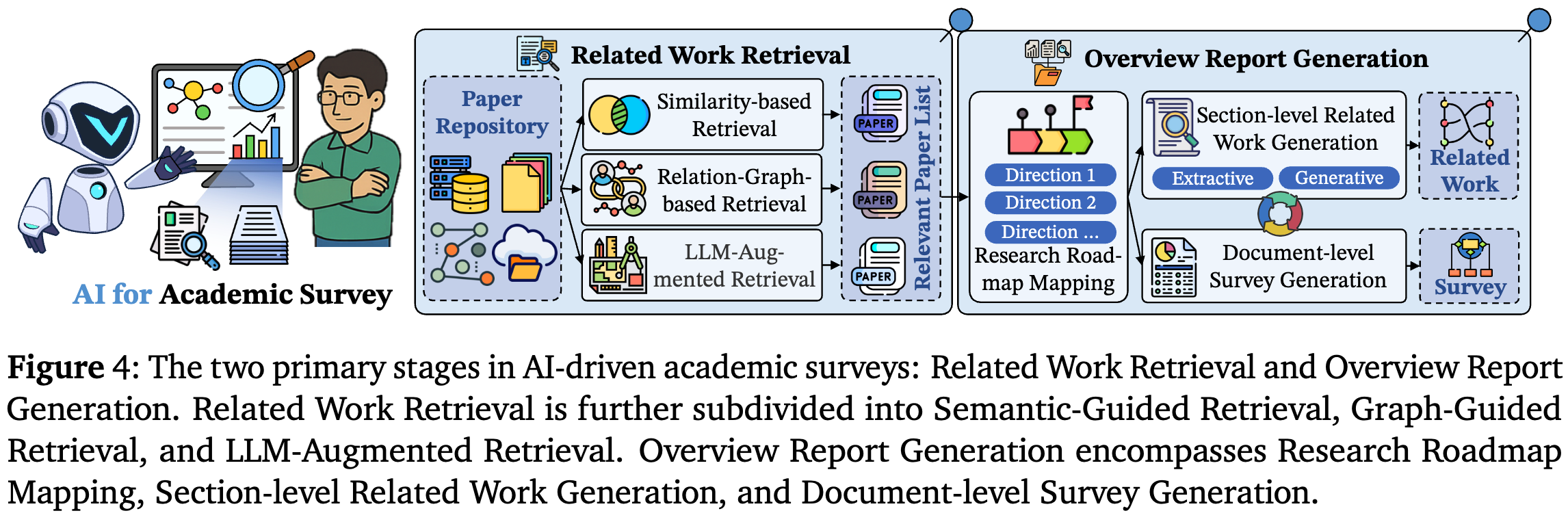

2. AI for Academic Survey

- Pre-writing: The stage of discovery in the writing process, Rohman et al.,

2.1 Related Work Retrieval

- Paper recommender systems: a literature survey, Beel et al.,

- A Review on Personalized Academic Paper Recommendation, Li et al.,

- Insights into relevant knowledge extraction techniques: a comprehensive review, Shahid et al.,

- A survey on rag meeting llms: Towards retrieval-augmented large language models, Fan et al.,

- Scientific paper recommendation: A survey, Bai et al.,

- SPLADE v2: Sparse lexical and expansion model for information retrieval, Formal et al.,

- Scientific paper recommendation systems: a literature review of recent publications, Kreutz et al.,

- Clinical Trial Retrieval via Multi-grained Similarity Learning, Luo et al.,

- Related Work and Citation Text Generation: A Survey, Li et al.,

- MIR: Methodology Inspiration Retrieval for Scientific Research Problems, Garikaparthi et al.,

- From who you know to what you read: Augmenting scientific recommendations with implicit social networks, Kang et al.,

- Comlittee: Literature discovery with personal elected author committees, Kang et al.,

- Citationsum: Citation-aware graph contrastive learning for scientific paper summarization, Luo et al.,

- Explaining relationships among research papers, Li et al.,

- KGValidator: A Framework for Automatic Validation of Knowledge Graph Construction, Boylan et al.,

- An academic recommender system on large citation data based on clustering, graph modeling and deep learning, Stergiopoulos et al.,

- ArZiGo: A recommendation system for scientific articles, Pinedo et al.,

- Graphusion: a RAG framework for Knowledge Graph Construction with a global perspective, Yang et al.,

- Taxonomy Tree Generation from Citation Graph, Hu et al.,

- Construction and Application of Materials Knowledge Graph in Multidisciplinary Materials Science via Large Language Model, Ye et al.,

- Docs2KG: A Human-LLM Collaborative Approach to Unified Knowledge Graph Construction from Heterogeneous Documents, Sun et al.,

- Paperweaver: Enriching topical paper alerts by contextualizing recommended papers with user-collected papers, Lee et al.,

- Dynamic Multi-Agent Orchestration and Retrieval for Multi-Source Question-Answer Systems using Large Language Models, Seabra et al.,

- Agentic Retrieval-Augmented Generation: A Survey on Agentic RAG, Singh et al.,

- PaSa: An LLM Agent for Comprehensive Academic Paper Search, He et al.,

- CuriousLLM: Elevating multi-document question answering with llm-enhanced knowledge graph reasoning, Yang et al.,

- Introducing Deep Research, OpenAI,

- LitLLMs, LLMs for Literature Review: Are we there yet?, Agarwal et al.,

- Select, Read, and Write: A Multi-Agent Framework of Full-Text-based Related Work Generation, Liu et al.,

- GPT-4o Search Preview, OpenAI,

- WebDancer: Towards Autonomous Information Seeking Agency, Wu et al.,

- Iterative self-incentivization empowers large language models as agentic searchers, Shi et al.,

- Multimodal DeepResearcher: Generating Text-Chart Interleaved Reports From Scratch with Agentic Framework, Yang et al.,

- DeepResearch Bench: A Comprehensive Benchmark for Deep Research Agents, Du et al.,

- AcademicBrowse: Benchmarking Academic Browse Ability of LLMs, Zhou et al.,

2.2 Overview Report Generation

- Towards automated related work summarization, Hoang et al.,

2.2.1 Research Roadmap Mapping

- Hierarchical catalogue generation for literature review: a benchmark, Zhu et al.,

- Assisting in writing wikipedia-like articles from scratch with large language models, Shao et al.,

- Chime: Llm-assisted hierarchical organization of scientific studies for literature review support, Hsu et al.,

- Knowledge Navigator: LLM-guided Browsing Framework for Exploratory Search in Scientific Literature, Katz et al.,

- Understanding Survey Paper Taxonomy about Large Language Models via Graph Representation Learning, Zhuang et al.,

- Artificial intelligence for literature reviews: Opportunities and challenges, Bolanos et al.,

- Taxonomy Tree Generation from Citation Graph, Hu et al.,

- LLMs for Literature Review: Are we there yet?, Agarwal et al.,

- Autosurvey: Large language models can automatically write surveys, Wang et al.,

- SurveyForge: On the Outline Heuristics, Memory-Driven Generation, and Multi-dimensional Evaluation for Automated Survey Writing, Yan et al.,

- Towards reasoning era: A survey of long chain-of-thought for reasoning large language models, Chen et al.,

- Ai2 Scholar QA: Organized Literature Synthesis with Attribution, Singh et al.,

2.2.2 Section-level Related Work Generation

- Towards automated related work summarization, Hoang et al.,

- Capturing relations between scientific papers: An abstractive model for related work section generation, Chen et al.,

- Target-aware abstractive related work generation with contrastive learning, Chen et al.,

- The use of a large language model to create plain language summaries of evidence reviews in healthcare: A feasibility study, Ovelman et al.,

- Related Work and Citation Text Generation: A Survey, Li et al.,

- 376 Using a large language model to create lay summaries of clinical study descriptions, Kaiser et al.,

- Select, Read, and Write: A Multi-Agent Framework of Full-Text-based Related Work Generation, Liu et al.,

- Towards automated related work summarization, Hoang et al.,

- Automatic generation of related work sections in scientific papers: an optimization approach, Hu et al.,

- Neural related work summarization with a joint context-driven attention mechanism, Wang et al.,

- Automatic generation of related work through summarizing citations, Chen et al.,

- Toc-rwg: Explore the combination of topic model and citation information for automatic related work generation, Wang et al.,

- Automatic Related Work Section Generation by Sentence Extraction and Reordering, Deng et al.,

- Neural related work summarization with a joint context-driven attention mechanism, Wang et al.,

- Automated lay language summarization of biomedical scientific reviews, Guo et al.,

- BACO: A background knowledge- and content-based framework for citing sentence generation, Ge et al.,

- Capturing relations between scientific papers: An abstractive model for related work section generation, Chen et al.,

- Target-aware abstractive related work generation with contrastive learning, Chen et al.,

- Multi-document scientific summarization from a knowledge graph-centric view, Wang et al.,

- Controllable citation sentence generation with language models, Gu et al.,

- Causal intervention for abstractive related work generation, Liu et al.,

- Cited text spans for citation text generation, Li et al.,

- Towards a unified framework for reference retrieval and related work generation, Shi et al.,

- Explaining relationships among research papers, Li et al.,

- Shallow synthesis of knowledge in gpt-generated texts: A case study in automatic related work composition, Martin-Boyle et al.,

- Related work and citation text generation: A survey, Li et al.,

- RST-LoRA: A Discourse-Aware Low-Rank Adaptation for Long Document Abstractive Summarization, Pu et al.,

- Reinforced Subject-Aware Graph Neural Network for Related Work Generation, Yu et al.,

- Disentangling Instructive Information from Ranked Multiple Candidates for Multi-Document Scientific Summarization, Wang et al.,

- Toward Related Work Generation with Structure and Novelty Statement, Nishimura et al.,

- Estimating Optimal Context Length for Hybrid Retrieval-augmented Multi-document Summarization, Pratapa et al.,

- Ask, Retrieve, Summarize: A Modular Pipeline for Scientific Literature Summarization, Achkar et al.,

2.2.3 Document-level Survey Generation

- Analyzing the past to prepare for the future: Writing a literature review, Webster et al.,

- Hierarchical catalogue generation for literature review: a benchmark, Zhu et al.,

- Bio-sieve: exploring instruction tuning large language models for systematic review automation, Robinson et al.,

- Litllm: A toolkit for scientific literature review, Agarwal et al.,

- Assisting in writing wikipedia-like articles from scratch with large language models, Shao et al.,

- Artificial intelligence for literature reviews: Opportunities and challenges, Bolanos et al.,

- Language agents achieve superhuman synthesis of scientific knowledge, Skarlinski et al.,

- Instruct Large Language Models to Generate Scientific Literature Survey Step by Step, Lai et al.,

- Openscholar: Synthesizing scientific literature with retrieval-augmented lms, Asai et al.,

- Intelligent summaries: Will Artificial Intelligence mark the finale for biomedical literature reviews?, Galli et al.,

- Autosurvey: Large language models can automatically write surveys, Wang et al.,

- LAG: LLM agents for Leaderboard Auto Generation on Demanding, Wu et al.,

- SurveyX: Academic Survey Automation via Large Language Models, Liang et al.,

- Automating research synthesis with domain-specific large language model fine-tuning, Susnjak et al.,

- SurveyForge: On the Outline Heuristics, Memory-Driven Generation, and Multi-dimensional Evaluation for Automated Survey Writing, Yan et al.,

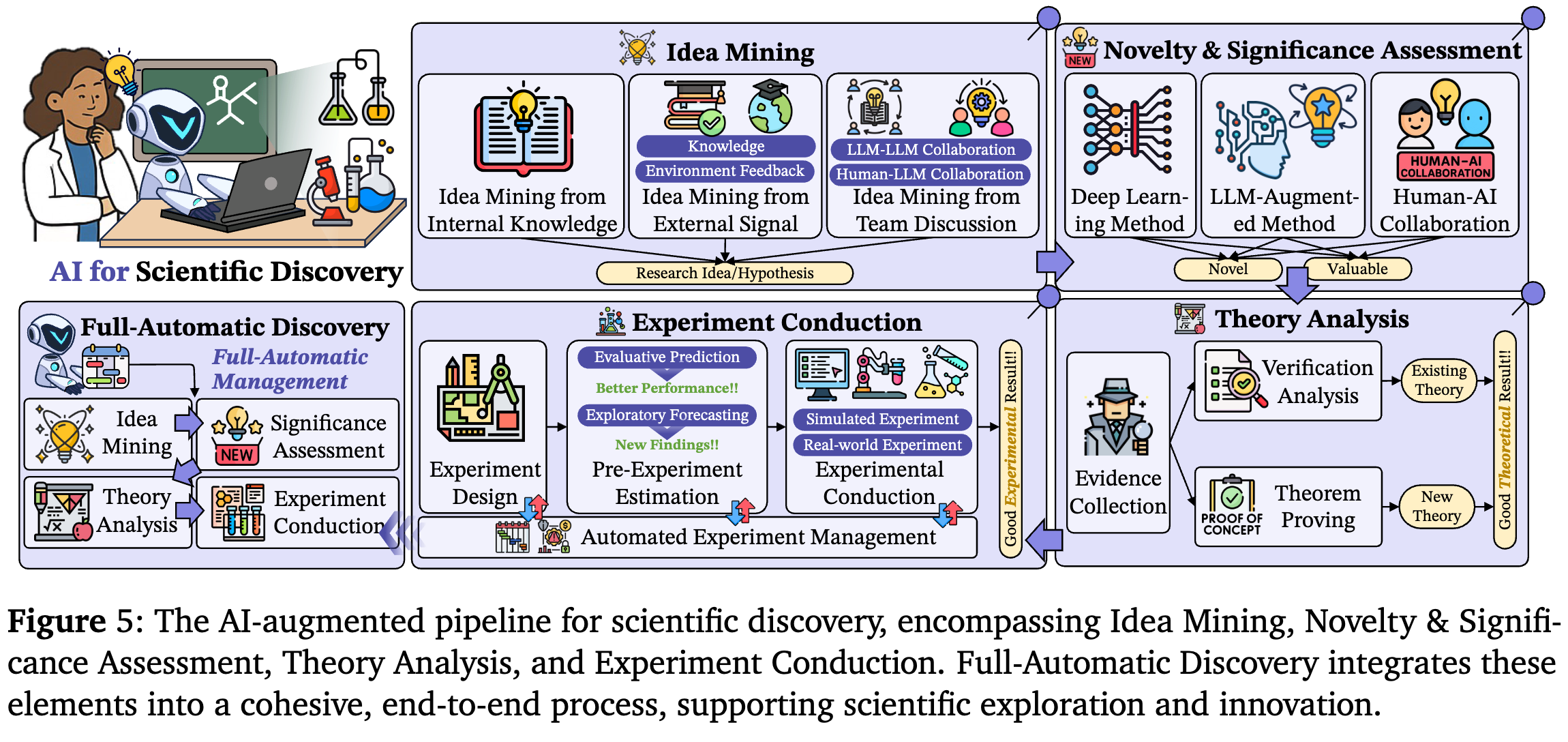

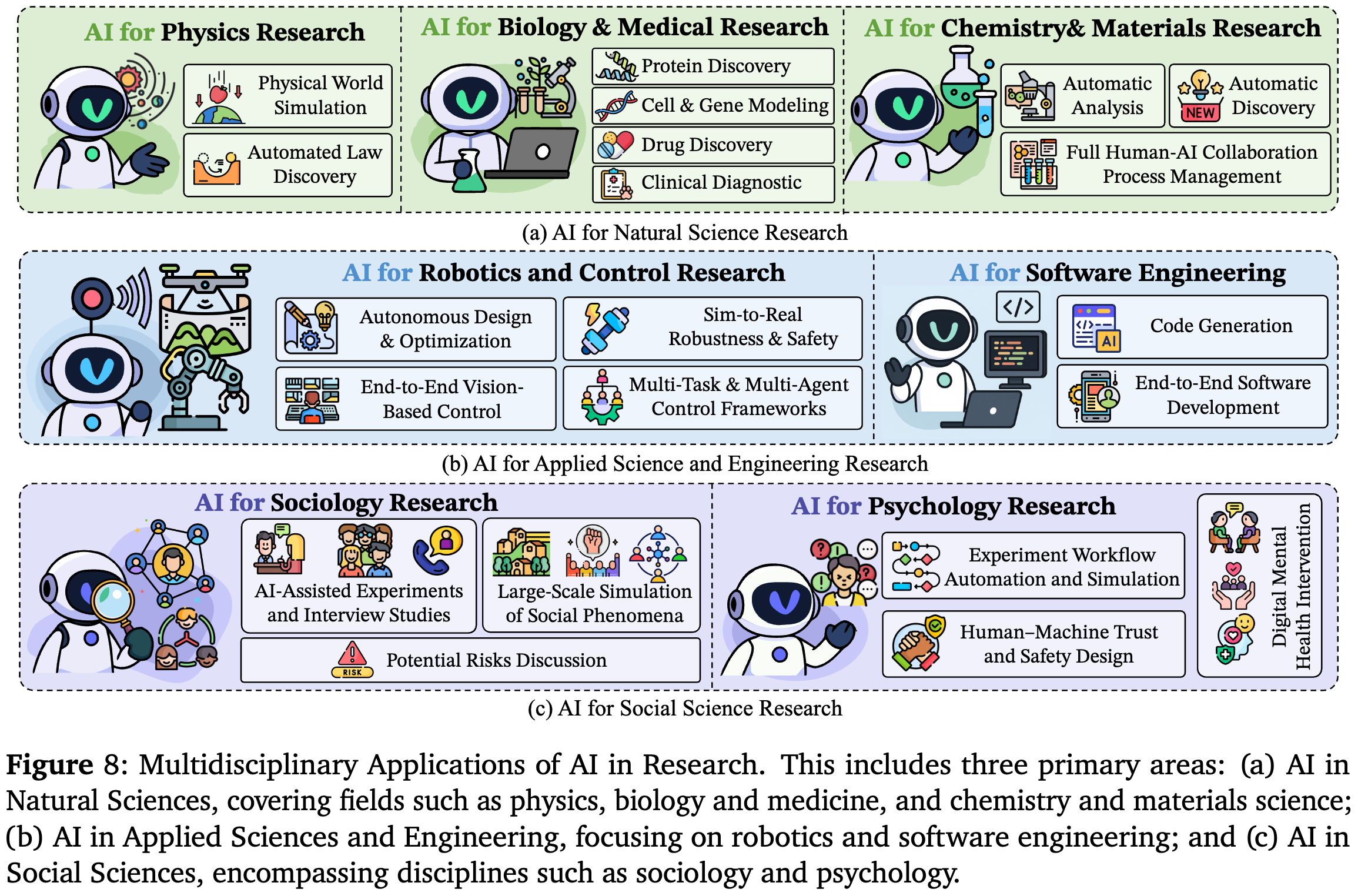

3. AI for Scientific Discovery

- Scientific discovery in the age of artificial intelligence, Wang et al.,

- Beyond Benchmarking: Automated Capability Discovery via Model Self-Exploration, Lu et al.,

- AIRUS: a simple workflow for AI-assisted exploration of scientific data, Harris et al.,

- On the Rise of New Mathematical Spaces and Towards AI-Driven Scientific Discovery, Raeini et al.,

- From Reasoning to Learning: A Survey on Hypothesis Discovery and Rule Learning with Large Language Models, He et al.,

- AI-Driven Discovery: The Transformative Impact of Machine Learning on Research and Development, Roy et al.,

3.1 Idea Mining

- Can Large Language Models Unlock Novel Scientific Research Ideas?, Kumar et al.,

- Can llms generate novel research ideas? a large-scale human study with 100+ nlp researchers, Si et al.,

- LLMs can realize combinatorial creativity: generating creative ideas via LLMs for scientific research, Gu et al.,

- Large language models for causal hypothesis generation in science, Cohrs et al.,

- Futuregen: Llm-rag approach to generate the future work of scientific article, Azher et al.,

- ResearchBench: Benchmarking LLMs in Scientific Discovery via Inspiration-Based Task Decomposition, Liu et al.,

- Sparks of science: Hypothesis generation using structured paper data, O'Neill et al.,

- Spark: A System for Scientifically Creative Idea Generation, Sanyal et al.,

- CHIMERA: A Knowledge Base of Idea Recombination in Scientific Literature, Sternlicht et al.,

- Cognitio Emergens: Agency, Dimensions, and Dynamics in Human-AI Knowledge Co-Creation, Lin et al.,

3.1.1 Idea Mining from Internal Knowledge

- Ideas are dimes a dozen: Large language models for idea generation in innovation, Girotra et al.,

- Prompting Diverse Ideas: Increasing AI Idea Variance, Meincke et al.,

- Using Large Language Models for Idea Generation in Innovation, Meincke et al.,

- Can llms generate novel research ideas? a large-scale human study with 100+ nlp researchers, Si et al.,

- Can Large Language Models Unlock Novel Scientific Research Ideas?, Kumar et al.,

- ECM: A Unified Electronic Circuit Model for Explaining the Emergence of In-Context Learning and Chain-of-Thought in Large Language Model, Chen et al.,

- Structuring Scientific Innovation: A Framework for Modeling and Discovering Impactful Knowledge Combinations, Chen et al.,

- Improving Research Idea Generation Through Data: An Empirical Investigation in Social Science, Liu et al.,

- Enhance Innovation by Boosting Idea Generation with Large Language Models, Haarmann et al.,

3.1.2 Idea Mining from External Signal

Idea Mining from External Knowledge

- Literature based discovery: models, methods, and trends, Henry et al.,

- Predicting the Future of AI with AI: High-quality link prediction in an exponentially growing knowledge network, Krenn et al.,

- A survey of large language models, Zhao et al.,

- Large language models meet nlp: A survey, Qin et al.,

- Position: data-driven discovery with large generative models, Majumder et al.,

- Generation and human-expert evaluation of interesting research ideas using knowledge graphs and large language models, Gu et al.,

- Interesting scientific idea generation using knowledge graphs and llms: Evaluations with 100 research group leaders, Gu et al.,

- Scimon: Scientific inspiration machines optimized for novelty, Wang et al.,

- Accelerating scientific discovery with generative knowledge extraction, graph-based representation, and multimodal intelligent graph reasoning, Buehler et al.,

- Literature meets data: A synergistic approach to hypothesis generation, Liu et al.,

- Chain of ideas: Revolutionizing research via novel idea development with llm agents, Li et al.,

- SciPIP: An LLM-based Scientific Paper Idea Proposer, Wang et al.,

- LLMs can realize combinatorial creativity: generating creative ideas via LLMs for scientific research, Gu et al.,

- Learning to Generate Research Idea with Dynamic Control, Li et al.,

- Graph of AI Ideas: Leveraging Knowledge Graphs and LLMs for AI Research Idea Generation, Gao et al.,

- Sparks of science: Hypothesis generation using structured paper data, O'Neill et al.,

- gpt-researcher, Assafelovic et al.,

- Mlagentbench: Evaluating language agents on machine learning experimentation, Huang et al.,

- Researchagent: Iterative research idea generation over scientific literature with large language models, Baek et al.,

- Augmenting large language models with chemistry tools, M. Bran et al.,

- MatPilot: an LLM-enabled AI Materials Scientist under the Framework of Human-Machine Collaboration, Ni et al.,

- The virtual lab: AI agents design new SARS-CoV-2 nanobodies with experimental validation, Swanson et al.,

- Agent laboratory: Using llm agents as research assistants, Schmidgall et al.,

- LUMI-lab: a Foundation Model-Driven Autonomous Platform Enabling Discovery of New Ionizable Lipid Designs for mRNA Delivery, Cui et al.,

- Towards an AI co-scientist, Gottweis et al.,

- Zochi Technical Report, AI et al.,

- AgentRxiv: Towards Collaborative Autonomous Research, Schmidgall et al.,

- Carl Technical Report, Institute et al.,

- Ideasynth: Iterative research idea development through evolving and composing idea facets with literature-grounded feedback, Pu et al.,

3.1.3 Idea Mining from Team Discussion

AI-AI Collaboration

- Large language models for automated open-domain scientific hypotheses discovery, Yang et al.,

- Exploring collaboration mechanisms for llm agents: A social psychology view, Zhang et al.,

- Acceleron: A tool to accelerate research ideation, Nigam et al.,

- Hypothesis generation with large language models, Zhou et al.,

- Researchagent: Iterative research idea generation over scientific literature with large language models, Baek et al.,

- Llm and simulation as bilevel optimizers: A new paradigm to advance physical scientific discovery, Ma et al.,

- The ai scientist: Towards fully automated open-ended scientific discovery, Lu et al.,

- Sciagents: Automating scientific discovery through multi-agent intelligent graph reasoning, Ghafarollahi et al.,

- Two heads are better than one: A multi-agent system has the potential to improve scientific idea generation, Su et al.,

- Chain of ideas: Revolutionizing research via novel idea development with llm agents, Li et al.,

- Nova: An iterative planning and search approach to enhance novelty and diversity of llm generated ideas, Hu et al.,

- The virtual lab: AI agents design new SARS-CoV-2 nanobodies with experimental validation, Swanson et al.,

- AIGS: Generating Science from AI-Powered Automated Falsification, Liu et al.,

- Large Language Models for Rediscovering Unseen Chemistry Scientific Hypotheses, Yang et al.,

- Dolphin: Closed-loop Open-ended Auto-research through Thinking, Practice, and Feedback, Yuan et al.,

- Multi-Novelty: Improve the Diversity and Novelty of Contents Generated by Large Language Models via inference-time Multi-Views Brainstorming, Lagzian et al.,

- Can Language Models Falsify? Evaluating Algorithmic Reasoning with Counterexample Creation, Sinha et al.,

- PiFlow: Principle-aware Scientific Discovery with Multi-Agent Collaboration, Pu et al.,

- An Interactive Co-Pilot for Accelerated Research Ideation, Nigam et al.,

- Scideator: Human-LLM Scientific Idea Generation Grounded in Research-Paper Facet Recombination, Radensky et al.,

- MatPilot: an LLM-enabled AI Materials Scientist under the Framework of Human-Machine Collaboration, Ni et al.,

- IRIS: Interactive Research Ideation System for Accelerating Scientific Discovery, Garikaparthi et al.,

- Human creativity in the age of llms: Randomized experiments on divergent and convergent thinking, Kumar et al.,

3.2 Novelty & Significance Assessment

- Does writing with language models reduce content diversity?, Padmakumar et al.,

- Greater variability in judgements of the value of novel ideas, Johnson et al.,

- How AI ideas affect the creativity, diversity, and evolution of human ideas: evidence from a large, dynamic experiment, Ashkinaze et al.,

- A content-based novelty measure for scholarly publications: A proof of concept, Wang et al.,

- Art or artifice? large language models and the false promise of creativity, Chakrabarty et al.,

- How ai processing delays foster creativity: Exploring research question co-creation with an llm-based agent, Liu et al.,

- Homogenization effects of large language models on human creative ideation, Anderson et al.,

- Shared imagination: Llms hallucinate alike, Zhou et al.,

- Can llms generate novel research ideas? a large-scale human study with 100+ nlp researchers, Si et al.,

- Supporting Assessment of Novelty of Design Problems Using Concept of Problem SAPPhIRE, Singh et al.,

- Semi-Supervised Classification With Novelty Detection Using Support Vector Machines and Linear Discriminant Analysis, Dove et al.,

- Can AI Examine Novelty of Patents?: Novelty Evaluation Based on the Correspondence between Patent Claim and Prior Art, Ikoma et al.,

- How do Humans and Language Models Reason About Creativity? A Comparative Analysis, Laverghetta Jr et al.,

- Grapheval: A lightweight graph-based llm framework for idea evaluation, Feng et al.,

- SCI-IDEA: Context-Aware Scientific Ideation Using Token and Sentence Embeddings, Keya et al.,

- Enabling ai scientists to recognize innovation: A domain-agnostic algorithm for assessing novelty, Wang et al.,

- SC4ANM: Identifying optimal section combinations for automated novelty prediction in academic papers, Wu et al.,

3.3 Theory Analysis

3.3.1 Scientific Claim Formalization

- LF: a foundational higher-order-logic, Goodsell et al.,

- Natural Language Hypotheses in Scientific Papers and How to Tame Them: Suggested Steps for Formalizing Complex Scientific Claims, Heger et al.,

- Position: Multimodal Large Language Models Can Significantly Advance Scientific Reasoning, Yan et al.,

- Sciclaimhunt: A large dataset for evidence-based scientific claim verification, Kumar et al.,

- Towards Effective Extraction and Evaluation of Factual Claims, Metropolitansky et al.,

- NSF-SciFy: Mining the NSF Awards Database for Scientific Claims, Rao et al.,

- Grammars of Formal Uncertainty: When to Trust LLMs in Automated Reasoning Tasks, Ganguly et al.,

- Valsci: an open-source, self-hostable literature review utility for automated large-batch scientific claim verification using large language models, Edelman et al.,

3.3.2 Scientific Evidence Collection

- MultiVerS: Improving scientific claim verification with weak supervision and full-document context, Wadden et al.,

- Missing counter-evidence renders NLP fact-checking unrealistic for misinformation, Glockner et al.,

- Investigating zero- and few-shot generalization in fact verification, Pan et al.,

- Comparing knowledge sources for open-domain scientific claim verification, Vladika et al.,

- Understanding Fine-grained Distortions in Reports of Scientific Findings, Wührl et al.,

- Improving health question answering with reliable and time-aware evidence retrieval, Vladika et al.,

- Zero-shot scientific claim verification using LLMs and citation text, Alvarez et al.,

- Grounding fallacies misrepresenting scientific publications in evidence, Glockner et al.,

- Can foundation models actively gather information in interactive environments to test hypotheses?, Ke et al.,

- LLM-based Corroborating and Refuting Evidence Retrieval for Scientific Claim Verification, Wang et al.,

- SciClaims: An End-to-End Generative System for Biomedical Claim Analysis, Ortega et al.,

3.3.3 Scientific Verification Analysis

- Proofver: Natural logic theorem proving for fact verification, Krishna et al.,

- The state of human-centered NLP technology for fact-checking, Das et al.,

- aedFaCT: Scientific Fact-Checking Made Easier via Semi-Automatic Discovery of Relevant Expert Opinions, Altuncu et al.,

- FactKG: Fact verification via reasoning on knowledge graphs, Kim et al.,

- Fact-checking complex claims with program-guided reasoning, Pan et al.,

- Prompt to be consistent is better than self-consistent? few-shot and zero-shot fact verification with pre-trained language models, Zeng et al.,

- Unsupervised Pretraining for Fact Verification by Language Model Distillation, Bazaga et al.,

- Towards llm-based fact verification on news claims with a hierarchical step-by-step prompting method, Zhang et al.,

- Characterizing and Verifying Scientific Claims: Qualitative Causal Structure is All You Need, Wu et al.,

- Can Large Language Models Detect Misinformation in Scientific News Reporting?, Cao et al.,

- What makes medical claims (un)verifiable? analyzing entity and relation properties for fact verification, Wührl et al.,

- ClaimVer: Explainable claim-level verification and evidence attribution of text through knowledge graphs, Dammu et al.,

- Generating fact checking explanations, Atanasova et al.,

- MAGIC: Multi-Argument Generation with Self-Refinement for Domain Generalization in Automatic Fact-Checking, Kao et al.,

- Robust Claim Verification Through Fact Detection, Jafari et al.,

- Automated justification production for claim veracity in fact checking: A survey on architectures and approaches, Eldifrawi et al.,

- Enhancing natural language inference performance with knowledge graph for COVID-19 automated fact-checking in Indonesian language, Muharram et al.,

- Augmenting the Veracity and Explanations of Complex Fact Checking via Iterative Self-Revision with LLMs, Zhang et al.,

- DEFAME: Dynamic Evidence-based FAct-checking with Multimodal Experts, Braun et al.,

- TheoremExplainAgent: Towards Video-based Multimodal Explanations for LLM Theorem Understanding, Ku et al.,

- Explainable Biomedical Claim Verification with Large Language Models, Liang et al.,

- Language Agents Mirror Human Causal Reasoning Biases. How Can We Help Them Think Like Scientists?, GX-Chen et al.,

3.3.4 Theorem Proving

- Generative language modeling for automated theorem proving, Polu et al.,

- Draft, sketch, and prove: Guiding formal theorem provers with informal proofs, Jiang et al.,

- Hypertree proof search for neural theorem proving, Lample et al.,

- Thor: Wielding hammers to integrate language models and automated theorem provers, Jiang et al.,

- Decomposing the enigma: Subgoal-based demonstration learning for formal theorem proving, Zhao et al.,

- Dt-solver: Automated theorem proving with dynamic-tree sampling guided by proof-level value function, Wang et al.,

- Lego-prover: Neural theorem proving with growing libraries, Wang et al.,

- Baldur: Whole-proof generation and repair with large language models, First et al.,

- Mustard: Mastering uniform synthesis of theorem and proof data, Huang et al.,

- A survey on deep learning for theorem proving, Li et al.,

- Towards large language models as copilots for theorem proving in lean, Song et al.,

- Proving theorems recursively, Wang et al.,

- Deepseek-prover: Advancing theorem proving in llms through large-scale synthetic data, Xin et al.,

- Lean-star: Learning to interleave thinking and proving, Lin et al.,

- Data for mathematical copilots: Better ways of presenting proofs for machine learning, Frieder et al.,

- Deep Active Learning based Experimental Design to Uncover Synergistic Genetic Interactions for Host Targeted Therapeutics, Zhu et al.,

- Discovering Symbolic Differential Equations with Symmetry Invariants, Yang et al.,

3.4 Scientific Experiment Conduction

- Toward machine learning optimization of experimental design, Baydin et al.,

- AI-assisted design of experiments at the frontiers of computation: methods and new perspectives, Vischia et al.,

- AI-Driven Automation Can Become the Foundation of Next-Era Science of Science Research, Chen et al.,

- EXP-Bench: Can AI Conduct AI Research Experiments?, Kon et al.,

- AI Scientists Fail Without Strong Implementation Capability, Zhu et al.,

3.4.1 Experiment Design

- Augmenting large language models with chemistry tools, M. Bran et al.,

- Sciagents: Automating scientific discovery through multi-agent intelligent graph reasoning, Ghafarollahi et al.,

- MatPilot: an LLM-enabled AI Materials Scientist under the Framework of Human-Machine Collaboration, Ni et al.,

- AI-assisted design of experiments at the frontiers of computation: methods and new perspectives, Vischia et al.,

- LUMI-lab: a Foundation Model-Driven Autonomous Platform Enabling Discovery of New Ionizable Lipid Designs for mRNA Delivery, Cui et al.,

- Towards an AI co-scientist, Gottweis et al.,

- AI-assisted inverse design of sequence-ordered high intrinsic thermal conductivity polymers, Huang et al.,

- Meta-Designing Quantum Experiments with Language Models, Arlt et al.,

- The application of artificial intelligence-assisted technology in cultural and creative product design, Liang et al.,

- A Human-LLM Note-Taking System with Case-Based Reasoning as Framework for Scientific Discovery, Craig et al.,

- Researchagent: Iterative research idea generation over scientific literature with large language models, Baek et al.,

- Biodiscoveryagent: An ai agent for designing genetic perturbation experiments, Roohani et al.,

- The ai scientist: Towards fully automated open-ended scientific discovery, Lu et al.,

- The virtual lab: AI agents design new SARS-CoV-2 nanobodies with experimental validation, Swanson et al.,

- Large Language Model Assisted Experiment Design with Generative Human-Behavior Agents, Liu et al.,

- Agent laboratory: Using llm agents as research assistants, Schmidgall et al.,

- Carl Technical Report, Institute et al.,

- Zochi Technical Report, AI et al.,

- AgentRxiv: Towards Collaborative Autonomous Research, Schmidgall et al.,

- Robin: A multi-agent system for automating scientific discovery, Ghareeb et al.,

3.4.2 Pre-Experiment Estimation

Evaluative Prediction

- DeepCRE: Transforming Drug R&D via AI-Driven Cross-drug Response Evaluation, Wu et al.,

- Physical formula enhanced multi-task learning for pharmacokinetics prediction, Li et al.,

- MASSW: A new dataset and benchmark tasks for ai-assisted scientific workflows, Zhang et al.,

- Unimatch: Universal matching from atom to task for few-shot drug discovery, Li et al.,

- LUMI-lab: a Foundation Model-Driven Autonomous Platform Enabling Discovery of New Ionizable Lipid Designs for mRNA Delivery, Cui et al.,

- Predicting Empirical AI Research Outcomes with Language Models, Wen et al.,

- Large language models surpass human experts in predicting neuroscience results, Luo et al.,

- Automatic chemical design using a data-driven continuous representation of molecules, Gómez-Bombarelli et al.,

- MolGAN: An implicit generative model for small molecular graphs, De Cao et al.,

- Google DeepMind's AI Dreamed Up 380,000 New Materials. The Next Challenge Is Making Them, Barber et al.,

- Augmenting large language models with chemistry tools, M. Bran et al.,

- The virtual lab: AI agents design new SARS-CoV-2 nanobodies with experimental validation, Swanson et al.,

- Towards an AI co-scientist, Gottweis et al.,

- FlavorDiffusion: Modeling Food-Chemical Interactions with Diffusion, Seo et al.,

- MOOSE-Chem3: Toward Experiment-Guided Hypothesis Ranking via Simulated Experimental Feedback, Liu et al.,

3.4.3 Experiment Management

- Transforming science labs into automated factories of discovery, Angelopoulos et al.,

- Development of an Automated Workflow for Screening the Assembly and Host–Guest Behavior of Metal-Organic Cages Towards Accelerated Discovery, Basford et al.,

- AI Driven Experiment Calibration and Control, Britton et al.,

- Agents for self-driving laboratories applied to quantum computing, Cao et al.,

- Intelligent experiments through real-time AI: Fast Data Processing and Autonomous Detector Control for sPHENIX and future EIC detectors, Kvapil et al.,

- Artificial intelligence meets laboratory automation in discovery and synthesis of metal–organic frameworks: A review, Zhao et al.,

- Agents for Change: Artificial Intelligent Workflows for Quantitative Clinical Pharmacology and Translational Sciences, Shahin et al.,

- Science acceleration and accessibility with self-driving labs, Canty et al.,

- Accelerating drug discovery with Artificial: a whole-lab orchestration and scheduling system for self-driving labs, Fehlis et al.,

- Uncovering Bottlenecks and Optimizing Scientific Lab Workflows with Cycle Time Reduction Agents, Fehlis et al.,

- Perspective on Utilizing Foundation Models for Laboratory Automation in Materials Research, Hatakeyama-Sato et al.,

- The future of self-driving laboratories: from human in the loop interactive AI to gamification, Hysmith et al.,

- Self-driving labs are the new AI asset, Axios et al.,

- DeepMind and BioNTech build AI lab assistants for scientific research, Times et al.,

- Autonomous platform for solution processing of electronic polymers, Wang et al.,

- Machine learning-led semi-automated medium optimization reveals salt as key for flaviolin production in Pseudomonas putida, Zournas et al.,

- Functional genomic hypothesis generation and experimentation by a robot scientist, King et al.,

- Self-driving laboratory for accelerated discovery of thin-film materials, MacLeod et al.,

- Self-driving laboratories for chemistry and materials science, Tom et al.,

- Self-driving laboratory platform for many-objective self-optimisation of polymer nanoparticle synthesis with cloud-integrated machine learning and orthogonal online analytics, Knox et al.,

3.4.4 Experimental Conduction

Automated Machine Learning Experiment Conduction

- AIDE: Human-Level Performance on Data Science Competitions, Dominik et al.,

- Automl-gpt: Automatic machine learning with gpt, Zhang et al.,

- Automl in the age of large language models: Current challenges, future opportunities and risks, Tornede et al.,

- Opendevin: An open platform for ai software developers as generalist agents, Wang et al.,

- Mlr-copilot: Autonomous machine learning research based on large language models agents, Li et al.,

- Autokaggle: A multi-agent framework for autonomous data science competitions, Li et al.,

- Large language models orchestrating structured reasoning achieve kaggle grandmaster level, Grosnit et al.,

- MLRC-Bench: Can Language Agents Solve Machine Learning Research Challenges?, Zhang et al.,

- AutoReproduce: Automatic AI Experiment Reproduction with Paper Lineage, Zhao et al.,

- Variable Extraction for Model Recovery in Scientific Literature, Liu et al.,

- AlphaEvolve: A coding agent for scientific and algorithmic discovery, Novikov et al.,

- Large language models can self-improve, Huang et al.,

- Mlcopilot: Unleashing the power of large language models in solving machine learning tasks, Zhang et al.,

- Training socially aligned language models in simulated human society, Liu et al.,

- Toolllm: Facilitating large language models to master 16000+ real-world apis, Qin et al.,

- An autonomous laboratory for the accelerated synthesis of novel materials, Szymanski et al.,

- Autonomous chemical research with large language models, Boiko et al.,

- Reflexion: Language agents with verbal reinforcement learning, Shinn et al.,

- Toolkengpt: Augmenting frozen language models with massive tools via tool embeddings, Hao et al.,

- Toolformer: Language models can teach themselves to use tools, Schick et al.,

- scGPT: toward building a foundation model for single-cell multi-omics using generative AI, Cui et al.,

- Large language model agent for hyper-parameter optimization, Liu et al.,

- MechAgents: Large language model multi-agent collaborations can solve mechanics problems, generate new data, and integrate knowledge, Ni et al.,

- Researchagent: Iterative research idea generation over scientific literature with large language models, Baek et al.,

- Automated social science: Language models as scientist and subjects, Manning et al.,

- Crispr-gpt: An llm agent for automated design of gene-editing experiments, Huang et al.,

- Position: LLMs can’t plan, but can help planning in LLM-modulo frameworks, Kambhampati et al.,

- Augmenting large language models with chemistry tools, M. Bran et al.,

- The ai scientist: Towards fully automated open-ended scientific discovery, Lu et al.,

- Sciagents: Automating scientific discovery through multi-agent intelligent graph reasoning, Ghafarollahi et al.,

- Wrong-of-thought: An integrated reasoning framework with multi-perspective verification and wrong information, Zhang et al.,

- Simulating Tabular Datasets through LLMs to Rapidly Explore Hypotheses about Real-World Entities, Zabaleta et al.,

- An automatic end-to-end chemical synthesis development platform powered by large language models, Ruan et al.,

- MatPilot: an LLM-enabled AI Materials Scientist under the Framework of Human-Machine Collaboration, Ni et al.,

- Towards LLM-Driven Multi-Agent Pipeline for Drug Discovery: Neurodegenerative Diseases Case Study, Solovev et al.,

- From Individual to Society: A Survey on Social Simulation Driven by Large Language Model-based Agents, Mou et al.,

- On Evaluating LLMs' Capabilities as Functional Approximators: A Bayesian Evaluation Framework, Siddiqui et al.,

- PSYCHE: A Multi-faceted Patient Simulation Framework for Evaluation of Psychiatric Assessment Conversational Agents, Lee et al.,

- Dolphin: Closed-loop Open-ended Auto-research through Thinking, Practice, and Feedback, Yuan et al.,

- DLPO: Towards a Robust, Efficient, and Generalizable Prompt Optimization Framework from a Deep-Learning Perspective, Peng et al.,

- Simulating cooperative prosocial behavior with multi-agent LLMs: Evidence and mechanisms for AI agents to inform policy decisions, Sreedhar et al.,

- Reinforcing clinical decision support through multi-agent systems and ethical ai governance, Chen et al.,

- OpenFOAMGPT 2.0: end-to-end, trustworthy automation for computational fluid dynamics, Feng et al.,

- Researchcodeagent: An llm multi-agent system for automated codification of research methodologies, Gandhi et al.,

- The ai scientist-v2: Workshop-level automated scientific discovery via agentic tree search, Yamada et al.,

- MooseAgent: A LLM Based Multi-agent Framework for Automating Moose Simulation, Zhang et al.,

- Owl: Optimized workforce learning for general multi-agent assistance in real-world task automation, Hu et al.,

3.4.5 Experimental Analysis

Automated Evaluation Metrics

- Eight years of AutoML: categorisation, review and trends, Barbudo et al.,

- Efficient bayesian learning curve extrapolation using prior-data fitted networks, Adriaensen et al.,

- Automated machine learning: past, present and future, Baratchi et al.,

- Variable Extraction for Model Recovery in Scientific Literature, Liu et al.,

- AutoReproduce: Automatic AI Experiment Reproduction with Paper Lineage, Zhao et al.,

- HeLM: Highlighted Evidence augmented Language Model for Enhanced Table-to-Text Generation, Bian et al.,

- Table meets llm: Can large language models understand structured table data? a benchmark and empirical study, Sui et al.,

- Table-LLM-Specialist: Language Model Specialists for Tables using Iterative Generator-Validator Fine-tuning, Xing et al.,

- LLM Based Exploratory Data Analysis Using BigQuery Data Canvas, Chaudhuri et al.,

- Toward machine learning optimization of experimental design, Baydin et al.,

- AI-assisted design of experiments at the frontiers of computation: methods and new perspectives, Vischia et al.,

- AI-Driven Automation Can Become the Foundation of Next-Era Science of Science Research, Chen et al.,

- EXP-Bench: Can AI Conduct AI Research Experiments?, Kon et al.,

- AI Scientists Fail Without Strong Implementation Capability, Zhu et al.,

3.5 Full-Automatic Discovery

- The ai scientist: Towards fully automated open-ended scientific discovery, Lu et al.,

- Aviary: training language agents on challenging scientific tasks, Narayanan et al.,

- Dolphin: Closed-loop Open-ended Auto-research through Thinking, Practice, and Feedback, Yuan et al.,

- Autonomous Microscopy Experiments through Large Language Model Agents, Mandal et al.,

- Agent laboratory: Using llm agents as research assistants, Schmidgall et al.,

- Curie: Toward rigorous and automated scientific experimentation with ai agents, Kon et al.,

- DORA AI Scientist: Multi-agent Virtual Research Team for Scientific Exploration Discovery and Automated Report Generation, Naumov et al.,

- Carl Technical Report, Institute et al.,

- AgentRxiv: Towards Collaborative Autonomous Research, Schmidgall et al.,

- Zochi Technical Report, AI et al.,

- NovelSeek: When Agent Becomes the Scientist – Building Closed-Loop System from Hypothesis to Verification, Team et al.,

- AutoSDT: Scaling Data-Driven Discovery Tasks Toward Open Co-Scientists, Li et al.,

- VISION: A modular AI assistant for natural human-instrument interaction at scientific user facilities, Mathur et al.,

- Scientific discovery in the age of artificial intelligence, Wang et al.,

- Beyond Benchmarking: Automated Capability Discovery via Model Self-Exploration, Lu et al.,

- AIRUS: a simple workflow for AI-assisted exploration of scientific data, Harris et al.,

- On the Rise of New Mathematical Spaces and Towards AI-Driven Scientific Discovery, Raeini et al.,

- From Reasoning to Learning: A Survey on Hypothesis Discovery and Rule Learning with Large Language Models, He et al.,

- AI-Driven Discovery: The Transformative Impact of Machine Learning on Research and Development, Roy et al.,

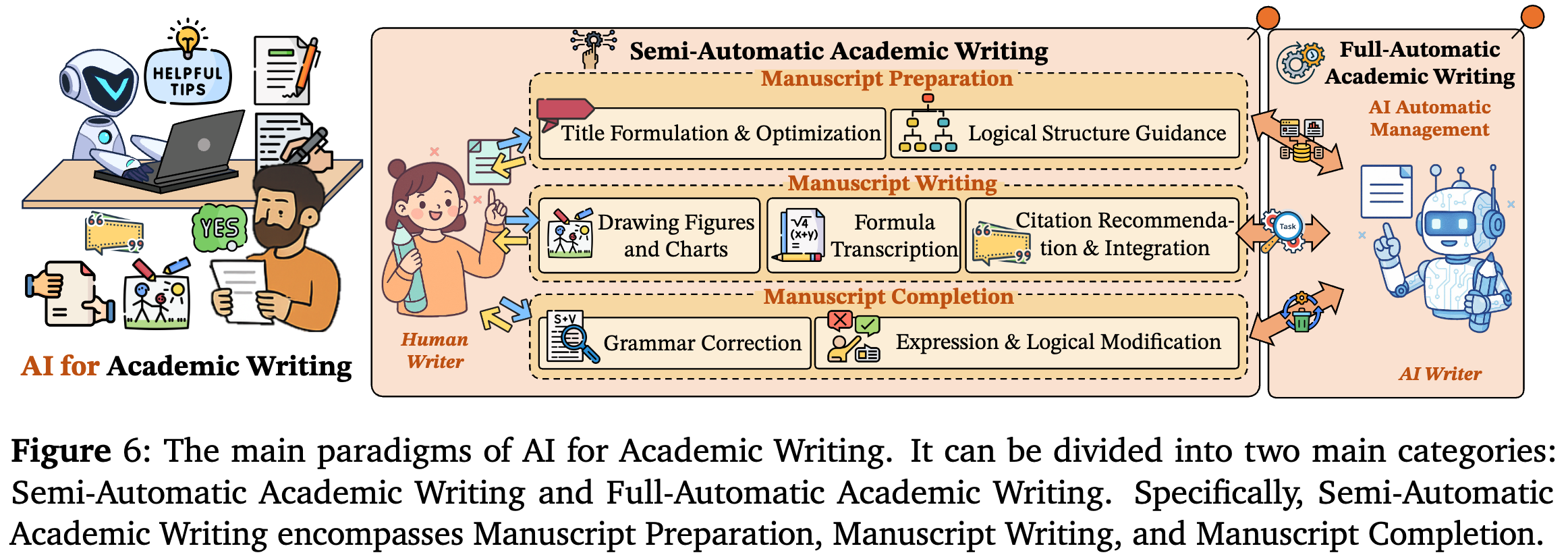

4. AI for Academic Writing

- Using artificial intelligence in academic writing and research: An essential productivity tool, Khalifa et al.,

- Human-LLM Coevolution: Evidence from Academic Writing, Geng et al.,

- Large language models penetration in scholarly writing and peer review, Zhou et al.,

- And Plato met ChatGPT: an ethical reflection on the use of chatbots in scientific research writing, with a particular focus on the social sciences, Calderon et al.,

4.1 Semi-Automatic Academic Writing

4.1.1 Assistance During Manuscript Preparation

Title Formulation and Optimization

- Personalized Graph-Based Retrieval for Large Language Models, Au et al.,

- Generating Accurate and Engaging Research Paper Titles Using NLP Techniques, Bikku et al.,

- MoDeST: A dataset for Multi Domain Scientific Title Generation, Bölücü et al.,

- Can pre-trained language models generate titles for research papers?, Rehman et al.,

- LalaEval: A Holistic Human Evaluation Framework for Domain-Specific Large Language Models, Sun et al.,

- LLM-Rubric: A Multidimensional, Calibrated Approach to Automated Evaluation of Natural Language Texts, Hashemi et al.,

4.1.2 Assistance During Manuscript Writing

- Enhancing academic writing skills and motivation: assessing the efficacy of ChatGPT in AI-assisted language learning for EFL students, Song et al.,

- Human-AI collaboration patterns in AI-assisted academic writing, Nguyen et al.,

- Patterns and Purposes: A Cross-Journal Analysis of AI Tool Usage in Academic Writing, Xu et al.,

- Divergent llm adoption and heterogeneous convergence paths in research writing, Lin et al.,

- Artificial intelligence-assisted academic writing: recommendations for ethical use, Cheng et al.,

- Text2chart: A multi-staged chart generator from natural language text, Rashid et al.,

- ChartReader: A unified framework for chart derendering and comprehension without heuristic rules, Cheng et al.,

- Figgen: Text to scientific figure generation, Rodriguez et al.,

- Automatikz: Text-guided synthesis of scientific vector graphics with tikz, Belouadi et al.,

- Scicapenter: Supporting caption composition for scientific figures with machine-generated captions and ratings, Hsu et al.,

- ChartFormer: A large vision language model for converting chart images into tactile accessible SVGs, Moured et al.,

- Figuring out Figures: Using Textual References to Caption Scientific Figures, Cao et al.,

- The ai scientist: Towards fully automated open-ended scientific discovery, Lu et al.,

- AiSciVision: A Framework for Specializing Large Multimodal Models in Scientific Image Classification, Hogan et al.,

- ScImage: How Good Are Multimodal Large Language Models at Scientific Text-to-Image Generation?, Zhang et al.,

- Chartcoder: Advancing multimodal large language model for chart-to-code generation, Zhao et al.,

- Understanding How Paper Writers Use AI-Generated Captions in Figure Caption Writing, Yin et al.,

- Multi-LLM Collaborative Caption Generation in Scientific Documents, Kim et al.,

- TikZero: Zero-Shot Text-Guided Graphics Program Synthesis, Belouadi et al.,

- Enhancing Chart-to-Code Generation in Multimodal Large Language Models via Iterative Dual Preference Learning, Zhang et al.,

- StarVector: Generating scalable vector graphics code from images and text, Rodriguez et al.,

- The ai scientist-v2: Workshop-level automated scientific discovery via agentic tree search, Yamada et al.,

- How to Create Accurate Scientific Illustrations with AI in 2025, Team et al.,

- Towards Semantic Markup of Mathematical Documents via User Interaction, Vrečar et al.,

- Automated LaTeX Code Generation from Handwritten Math Expressions Using Vision Transformer, Sundararaj et al.,

- LATTE: Improving Latex Recognition for Tables and Formulae with Iterative Refinement, Jiang et al.,

- Chronological citation recommendation with time preference, Ma et al.,

- When large language models meet citation: A survey, Zhang et al.,

- Directed Criteria Citation Recommendation and Ranking Through Link Prediction, Watson et al.,

- ILCiteR: Evidence-grounded Interpretable Local Citation Recommendation, Roy et al.,

- CiteBART: Learning to Generate Citations for Local Citation Recommendation, Çelik et al.,

- Benchmark for Evaluation and Analysis of Citation Recommendation Models, Maharjan et al.,

- PaSa: An LLM Agent for Comprehensive Academic Paper Search, He et al.,

- ScholarCopilot: Training Large Language Models for Academic Writing with Accurate Citations, Wang et al.,

- How deep do large language models internalize scientific literature and citation practices?, Algaba et al.,

- SCIRGC: Multi-Granularity Citation Recommendation and Citation Sentence Preference Alignment, Li et al.,

- Towards AI-assisted Academic Writing, Liebling et al.,

4.1.3 Assistance After Manuscript Completion

Grammar Correction

- Csed: A chinese semantic error diagnosis corpus, Sun et al.,

- Neural Automated Writing Evaluation with Corrective Feedback, Wang et al.,

- LM-Combiner: A Contextual Rewriting Model for Chinese Grammatical Error Correction, Wang et al.,

- Improving Grammatical Error Correction via Contextual Data Augmentation, Wang et al.,

- How Paperpal Enhances English Writing Quality and Improves Productivity for Japanese Academics, George et al.,

- Transforming hematological research documentation with large language models: an approach to scientific writing and data analysis, Yang et al.,

- The usage of a transformer based and artificial intelligence driven multidimensional feedback system in english writing instruction, Zheng et al.,

- Learning to split and rephrase from Wikipedia edit history, Botha et al.,

- WikiAtomicEdits: A multilingual corpus of Wikipedia edits for modeling language and discourse, Faruqui et al.,

- Diamonds in the rough: Generating fluent sentences from early-stage drafts for academic writing assistance, Ito et al.,

- Text editing by command, Faltings et al.,

- Wordcraft: A human-AI collaborative editor for story writing, Coenen et al.,

- Machine-in-the-loop rewriting for creative image captioning, Padmakumar et al.,

- Read, revise, repeat: A system demonstration for human-in-the-loop iterative text revision, Du et al.,

- Coauthor: Designing a human-ai collaborative writing dataset for exploring language model capabilities, Lee et al.,

- Sparks: Inspiration for science writing using language models, Gero et al.,

- Techniques for supercharging academic writing with generative AI, Lin et al.,

- Overleafcopilot: Empowering academic writing in overleaf with large language models, Wen et al.,

- Augmenting the author: Exploring the potential of AI collaboration in academic writing, Tu et al.,

- Step-Back Profiling: Distilling User History for Personalized Scientific Writing, Tang et al.,

- Closing the Loop: Learning to Generate Writing Feedback via Language Model Simulated Student Revisions, Nair et al.,

- Enhancing Chinese Essay Discourse Logic Evaluation Through Optimized Fine-Tuning of Large Language Models, Song et al.,

- Cocoa: Co-Planning and Co-Execution with AI Agents, Feng et al.,

- Prototypical Human-AI Collaboration Behaviors from LLM-Assisted Writing in the Wild, Mysore et al.,

- XtraGPT: LLMs for Human-AI Collaboration on Controllable Academic Paper Revision, Chen et al.,

- Autonomous LLM-Driven Research—from Data to Human-Verifiable Research Papers, Ifargan et al.,

4.2 Full-Automatic Academic Writing

- The ai scientist: Towards fully automated open-ended scientific discovery, Lu et al.,

- Agent laboratory: Using llm agents as research assistants, Schmidgall et al.,

- ScholaWrite: A Dataset of End-to-End Scholarly Writing Process, Wang et al.,

- Beyond outlining: Heterogeneous recursive planning for adaptive long-form writing with language models, Xiong et al.,

- AgentRxiv: Towards Collaborative Autonomous Research, Schmidgall et al.,

- Zochi Technical Report, AI et al.,

- Carl Technical Report, Institute et al.,

- The ai scientist-v2: Workshop-level automated scientific discovery via agentic tree search, Yamada et al.,

- Using artificial intelligence in academic writing and research: An essential productivity tool, Khalifa et al.,

- Human-LLM Coevolution: Evidence from Academic Writing, Geng et al.,

- Large language models penetration in scholarly writing and peer review, Zhou et al.,

- And Plato met ChatGPT: an ethical reflection on the use of chatbots in scientific research writing, with a particular focus on the social sciences, Calderon et al.,

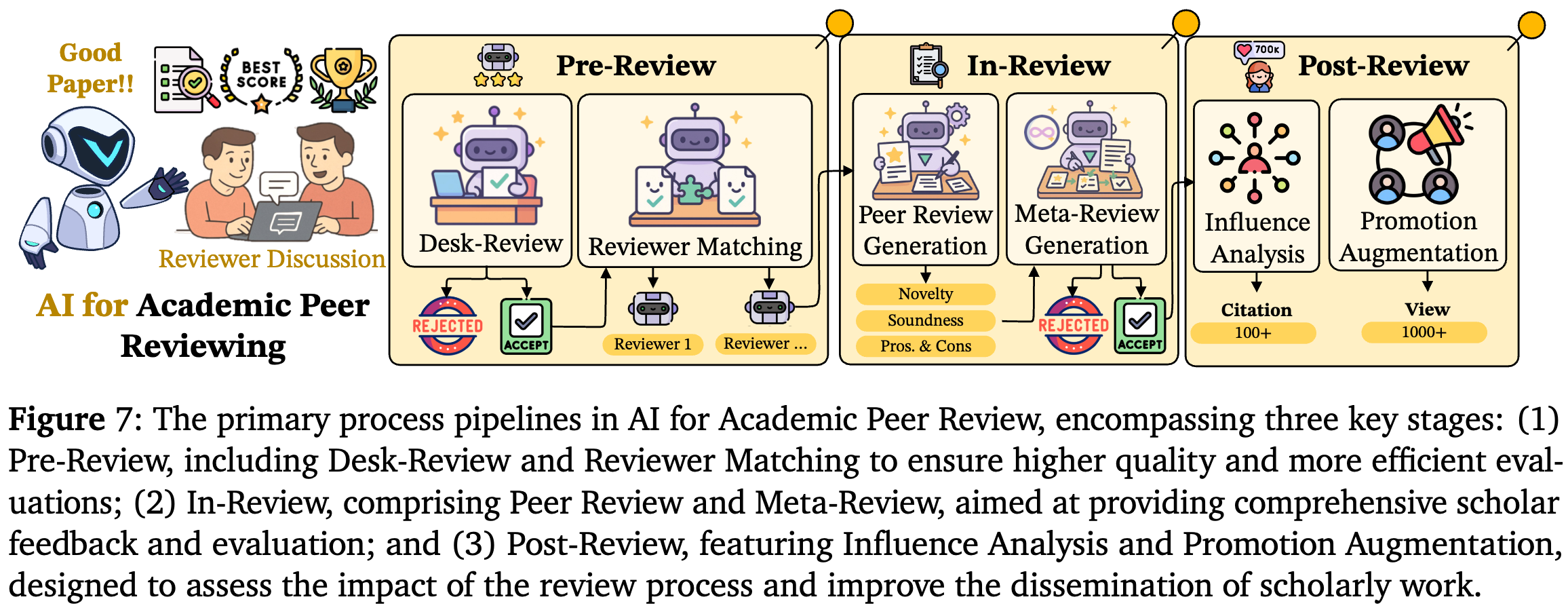

5. AI for Academic Peer Reviewing

- Can we automate scientific reviewing?, Yuan et al.,

- Reviewergpt? an exploratory study on using large language models for paper reviewing, Liu et al.,

- Unveiling the sentinels: Assessing ai performance in cybersecurity peer review, Niu et al.,

- Automated scholarly paper review: Concepts, technologies, and challenges, Lin et al.,

- What Can Natural Language Processing Do for Peer Review?, Kuznetsov et al.,

- Artificial intelligence to support publishing and peer review: A summary and review, Kousha et al.,

- Large language models for automated scholarly paper review: A survey, Zhuang et al.,

- Evaluating the predictive capacity of ChatGPT for academic peer review outcomes across multiple platforms, Thelwall et al.,

- A framework for reviewing the results of automated conversion of structured organic synthesis procedures from the literature, Machi et al.,

5.1 Pre-Review

5.1.1 Desk-Review

- How to Make Peer Review Recommendations and Decisions, Society et al.,

- Helping editors find reviewers, Tedford et al.,

- Snapp: Springer Nature's next-generation peer review system, Nature et al.,

- Matching papers and reviewers at large conferences, Leyton-Brown et al.,

- Streamlining the review process: AI-generated annotations in research manuscripts, Díaz et al.,

- Artificial intelligence in peer review: enhancing efficiency while preserving integrity, Doskaliuk et al.,

- Enhancing Academic Decision-Making: A Pilot Study of AI-Supported Journal Selection in Higher Education, Farber et al.,

5.1.2 Reviewer Matching

- A framework for optimizing paper matching, Charlin et al.,

- The Toronto paper matching system: an automated paper-reviewer assignment system, Charlin et al.,

- Pistis: A conflict of interest declaration and detection system for peer review management, Wu et al.,

- An automated conflict of interest based greedy approach for conference paper assignment system, Pradhan et al.,

- Matching papers and reviewers at large conferences, Leyton-Brown et al.,

- Autonomous Machine Learning-Based Peer Reviewer Selection System, Aitymbetov et al.,

- Automated Research Review Support Using Machine Learning, Large Language Models, and Natural Language Processing, Pendyala et al.,

- Peer review expert group recommendation: A multi-subject coverage-based approach, Fu et al.,

5.2 In-Review

5.2.1 Peer-Review

Score Prediction

- ALL-IN-ONE: Multi-Task Learning BERT Models for Evaluating Peer Assessments, Jia et al.,

- The quality assist: A technology-assisted peer review based on citation functions to predict the paper quality, Basuki et al.,

- Exploiting labeled and unlabeled data via transformer fine-tuning for peer-review score prediction, Muangkammuen et al.,

- RelevAI-Reviewer: A Benchmark on AI Reviewers for Survey Paper Relevance, Couto et al.,

- KID-Review: Knowledge-guided scientific review generation with oracle pre-training, Yuan et al.,

- GPT4 is slightly helpful for peer-review assistance: A pilot study, Robertson et al.,

- MARG: Multi-agent review generation for scientific papers, D'Arcy et al.,

- Peer review as a multi-turn and long-context dialogue with role-based interactions, Tan et al.,

- AgentReview: Exploring peer review dynamics with LLM agents, Jin et al.,

- Can large language models provide useful feedback on research papers? A large-scale empirical analysis, Liang et al.,

- Automated Focused Feedback Generation for Scientific Writing Assistance, Chamoun et al.,

- The AI Scientist: Towards fully automated open-ended scientific discovery, Lu et al.,

- SEAGraph: Unveiling the Whole Story of Paper Review Comments, Yu et al.,

- The AI Scientist-v2: Workshop-level automated scientific discovery via agentic tree search, Yamada et al.,

- A Dataset of Peer Reviews (PeerRead): Collection, Insights and NLP Applications, Kang et al.,

- PeerAssist: leveraging on paper-review interactions to predict peer review decisions, Bharti et al.,

- MARG: Multi-agent review generation for scientific papers, D'Arcy et al.,

- Peer review as a multi-turn and long-context dialogue with role-based interactions, Tan et al.,

- Automated review generation method based on large language models, Wu et al.,

- AI-Driven review systems: evaluating LLMs in scalable and bias-aware academic reviews, Tyser et al.,

- MAMORX: Multi-agent multi-modal scientific review generation with external knowledge, Taechoyotin et al.,

- CycleResearcher: Improving automated research via automated review, Weng et al.,

- OpenReviewer: A Specialized Large Language Model for Generating Critical Scientific Paper Reviews, Idahl et al.,

- The role of large language models in the peer-review process: opportunities and challenges for medical journal reviewers and editors, Lee et al.,

- PiCO: Peer Review in LLMs based on Consistency Optimization, Ning et al.,

- Mind the Blind Spots: A Focus-Level Evaluation Framework for LLM Reviews, Shin et al.,

- ReviewEval: An evaluation framework for AI-generated reviews, Kirtani et al.,

- Automatically Evaluating the Paper Reviewing Capability of Large Language Models, Shin et al.,

- DeepReview: Improving LLM-based paper review with human-like deep thinking process, Zhu et al.,

- ReviewAgents: Bridging the gap between human and AI-generated paper reviews, Gao et al.,

- Reviewing Scientific Papers for Critical Problems With Reasoning LLMs: Baseline Approaches and Automatic Evaluation, Zhang et al.,

- REMOR: Automated Peer Review Generation with LLM Reasoning and Multi-Objective Reinforcement Learning, Taechoyotin et al.,

- TreeReview: A Dynamic Tree of Questions Framework for Deep and Efficient LLM-based Scientific Peer Review, Chang et al.,

- PaperEval: A universal, quantitative, and explainable paper evaluation method powered by a multi-agent system, Huang et al.,

5.2.2 Meta-Review

- Summarizing multiple documents with conversational structure for meta-review generation, Li et al.,

- Meta-review generation with checklist-guided iterative introspection, Zeng et al.,

- When Reviewers Lock Horn: Finding Disagreement in Scientific Peer Reviews, Kumar et al.,

- A sentiment consolidation framework for meta-review generation, Li et al.,

- Prompting LLMs to Compose Meta-Review Drafts from Peer-Review Narratives of Scholarly Manuscripts, Santu et al.,

- Towards automated meta-review generation via an NLP/ML pipeline in different stages of the scholarly peer review process, Kumar et al.,

- MetaWriter: Exploring the potential and perils of AI writing support in scientific peer review, Sun et al.,

- GLIMPSE: Pragmatically Informative Multi-Document Summarization for Scholarly Reviews, Darrin et al.,

- PeerArg: Argumentative Peer Review with LLMs, Sukpanichnant et al.,

- Bridging Social Psychology and LLM Reasoning: Conflict-Aware Meta-Review Generation via Cognitive Alignment, Chen et al.,

- LLMs as Meta-Reviewers' Assistants: A Case Study, Hossain et al.,

5.3 Post-Review

5.3.1 Influence Analysis

- Popular and/or prestigious? Measures of scholarly esteem, Ding et al.,

- Measuring academic influence: Not all citations are equal, Zhu et al.,

- An overview of Microsoft Academic Service (MAS) and applications, Sinha et al.,

- Factors affecting number of citations: a comprehensive review of the literature, Tahamtan et al.,

- Relative citation ratio (RCR): a new metric that uses citation rates to measure influence at the article level, Hutchins et al.,

- HLM-Cite: Hybrid Language Model Workflow for Text-based Scientific Citation Prediction, Hao et al.,

- From Words to Worth: Newborn Article Impact Prediction with LLM, Zhao et al.,

- Large language models surpass human experts in predicting neuroscience results, Luo et al.,

5.3.2 Promotion Enhancement

- From complexity to clarity: How AI enhances perceptions of scientists and the public's understanding of science, Markowitz et al.,

- Automatic Evaluation Metrics for Artificially Generated Scientific Research, Höpner et al.,

- Stealing Creator's Workflow: A Creator-Inspired Agentic Framework with Iterative Feedback Loop for Improved Scientific Short-form Generation, Park et al.,

- P2P: Automated Paper-to-Poster Generation and Fine-Grained Benchmark, Sun et al.,


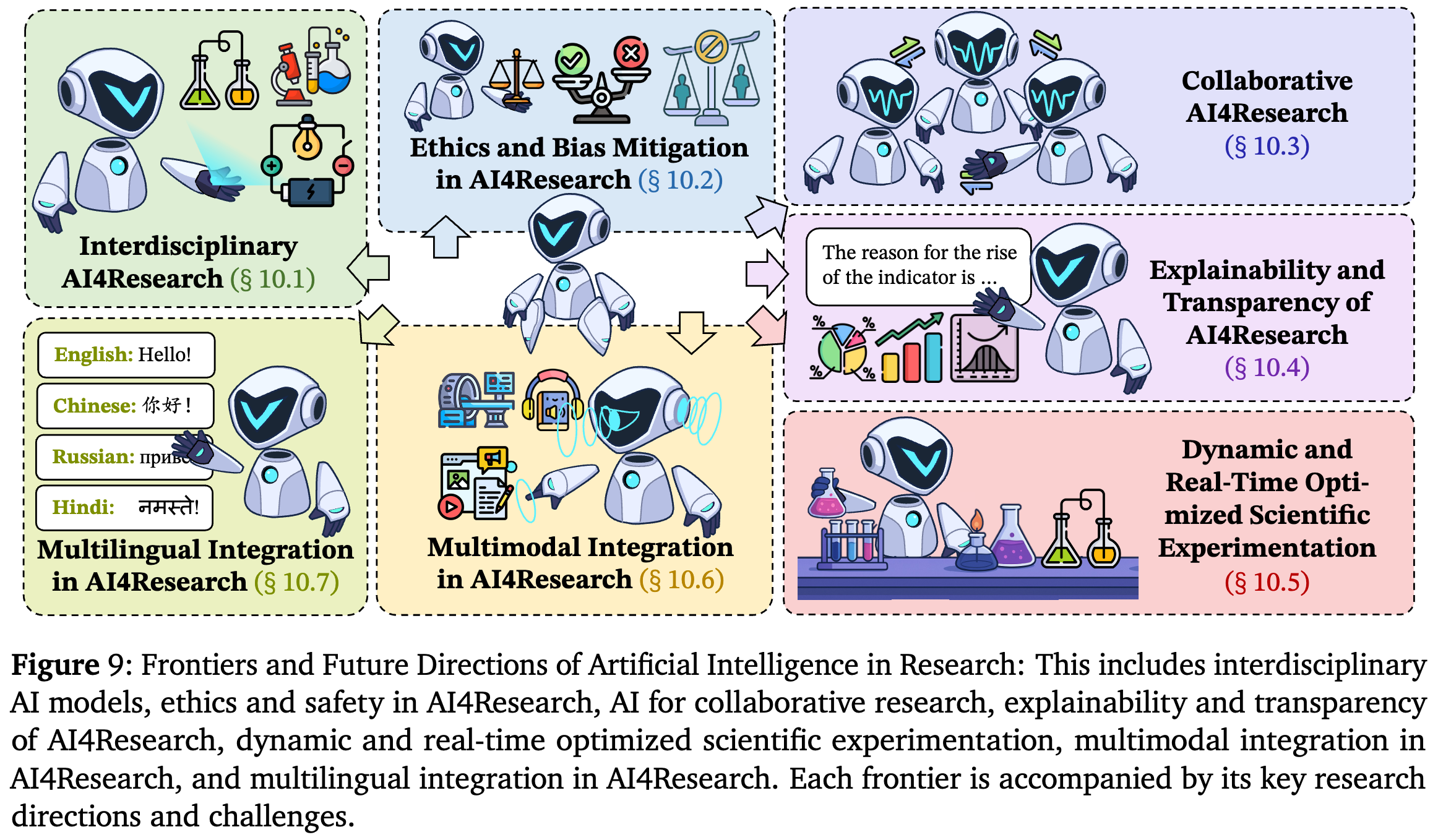

- A framework for reviewing the results of automated conversion of